QuantumNAS: Noise-Adaptive Search for Robust Quantum Circuits

Hanrui Wang, Yongshan Ding, Jiaqi Gu, Zirui Li, Yujun Lin, David Z. Pan, Frederic T. Chong, Song Han

HPCA 2022 paper / code / poster

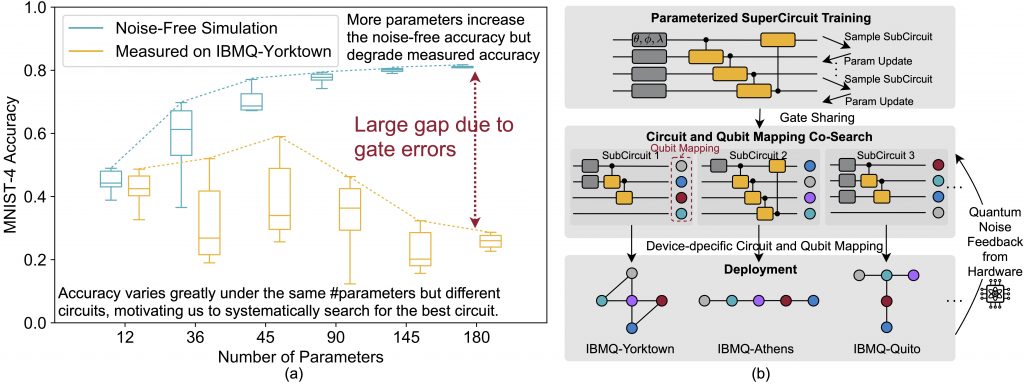

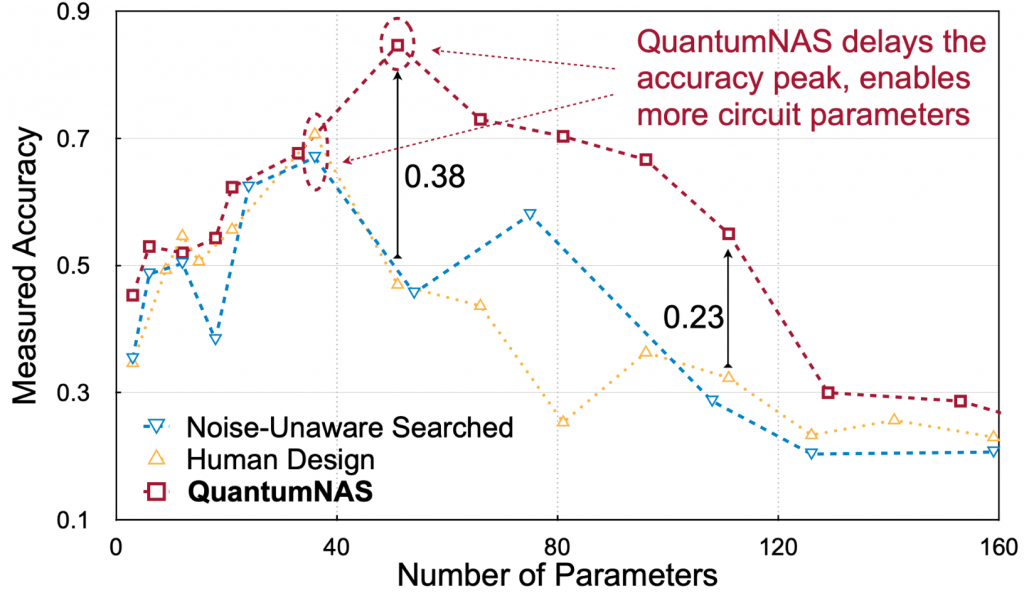

Quantum noise is the key challenge in Noisy Intermediate-Scale Quantum (NISQ) computers. Previous work on mitigating noise has primarily focused on gate-level or pulse-level noise-adaptive compilation. However, limited research has explored a higher level of optimization: making the quantum circuits themselves resilient to noise. We propose QuantumNAS, a comprehensive framework for noise-adaptive co-search of the variational circuit and qubit mapping. Variational quantum circuits are a promising approach for quantum machine learning (QML) and quantum simulation. However, finding the best variational circuit and its optimal parameters is challenging due to the large design space and parameter training cost. We propose to decouple the circuit search and parameter training by introducing a novel SuperCircuit. The SuperCircuit is constructed with multiple layers of pre-defined parameterized gates and trained by iteratively sampling and updating subsets of its parameters (SubCircuits). It provides an accurate estimate of the performance of SubCircuits trained from scratch. Then we perform an evolutionary co-search of the SubCircuit and its qubit mapping. The SubCircuit performance is estimated with parameters inherited from the SuperCircuit and simulated with real device noise models. Finally, we perform iterative gate pruning and finetuning to remove redundant gates. Extensively evaluated with 12 QML and VQE benchmarks on 10 quantum computers, QuantumNAS significantly outperforms baselines. For QML, QuantumNAS is the first to demonstrate over 95% 2-class, 85% 4-class, and 32% 10-class classification accuracy on real quantum computers. It also achieves the lowest eigenvalue for VQE tasks on H2, H2O, LiH, CH4, and BeH2 compared with UCCSD.
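The evolutionary co-search step described above can be illustrated with a small, self-contained sketch. Everything here is an assumption made for illustration and is not taken from the QuantumNAS code: the gene encoding (number of leading gates kept per layer plus a logical-to-physical qubit permutation), the constants, the `QUBIT_ERROR` table, and especially `toy_score`, which only stands in for "evaluate the SubCircuit with inherited SuperCircuit parameters under a real device noise model".

```python
import random

N_LAYERS, MAX_GATES, N_QUBITS = 3, 4, 4
# Hypothetical per-physical-qubit error rates (not real device data).
QUBIT_ERROR = [0.01, 0.05, 0.02, 0.08]

def random_gene(rng):
    """A gene is (gates kept per layer, logical-to-physical qubit mapping)."""
    widths = [rng.randint(1, MAX_GATES) for _ in range(N_LAYERS)]
    mapping = rng.sample(range(N_QUBITS), N_QUBITS)
    return widths, mapping

def mutate(gene, rng):
    """Either resample one layer width or swap two entries of the mapping."""
    widths, mapping = list(gene[0]), list(gene[1])
    if rng.random() < 0.5:
        widths[rng.randrange(N_LAYERS)] = rng.randint(1, MAX_GATES)
    else:
        i, j = rng.sample(range(N_QUBITS), 2)
        mapping[i], mapping[j] = mapping[j], mapping[i]
    return widths, mapping

def crossover(a, b, rng):
    """Mix layer widths from both parents; copy the mapping from one of them."""
    widths = [rng.choice(pair) for pair in zip(a[0], b[0])]
    mapping = list(rng.choice([a[1], b[1]]))
    return widths, mapping

def toy_score(gene):
    """Placeholder fitness: more gates add capacity, noisy qubits cost fidelity."""
    widths, mapping = gene
    fidelity = 1.0
    for w in widths:
        for logical in range(w):  # gate k of a layer acts on logical qubit k
            fidelity *= 1.0 - QUBIT_ERROR[mapping[logical]]
    capacity = 1.0 - 0.8 ** sum(widths)
    return capacity * fidelity

def evolutionary_search(score, rng, pop_size=16, n_parents=4, iters=20):
    """Keep the best genes as parents, refill the population from them."""
    population = [random_gene(rng) for _ in range(pop_size)]
    for _ in range(iters):
        parents = sorted(population, key=score, reverse=True)[:n_parents]
        children = list(parents)  # elitism: parents survive unchanged
        while len(children) < pop_size:
            if rng.random() < 0.5:
                children.append(mutate(rng.choice(parents), rng))
            else:
                a, b = rng.sample(parents, 2)
                children.append(crossover(a, b, rng))
        population = children
    return max(population, key=score)

best = evolutionary_search(toy_score, random.Random(0))
print("layer widths:", best[0], "qubit mapping:", best[1])
```

Searching the circuit and the mapping in one gene lets the search trade circuit capacity against the error rates of the physical qubits that the gates land on, which is the intuition behind co-searching the two rather than optimizing them separately.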

QuantumNAS motivation and framework overview:

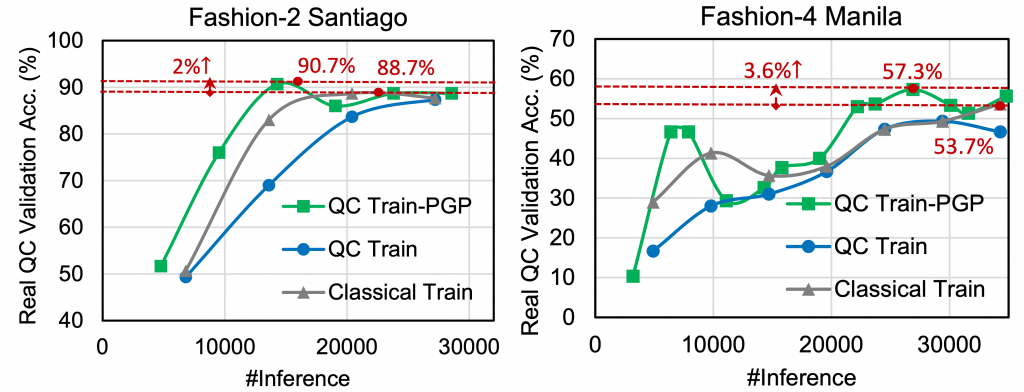

QuantumNAS models achieve higher robustness and accuracy than human baseline and noise-unaware search models:

RobustQNN: Noise-Aware Training for Robust Quantum Neural Networks

Hanrui Wang, Jiaqi Gu, Yongshan Ding, Zirui Li, Frederic T. Chong, David Z. Pan and Song Han

DAC 2022 paper / code

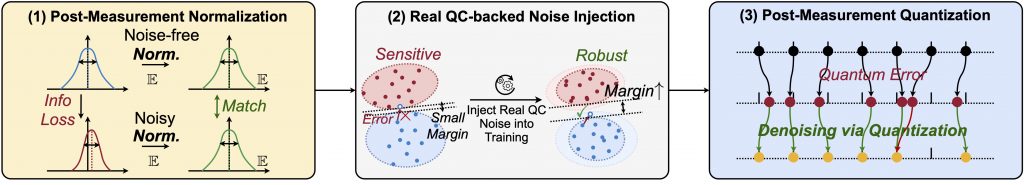

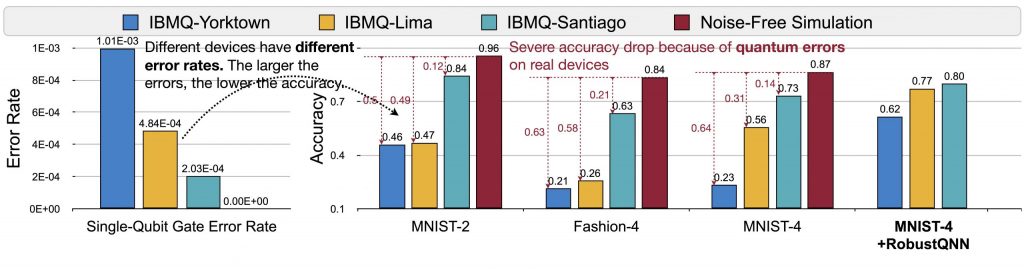

Quantum Neural Network (QNN) is a promising application towards quantum advantage on near-term quantum hardware. However, due to large quantum noise (errors), the performance of QNN models degrades severely on real quantum devices. For example, the accuracy gap between noise-free simulation and noisy results on IBMQ-Yorktown for MNIST-4 classification is over 60%. Existing noise mitigation methods are general ones that do not leverage the unique characteristics of QNNs and are only applicable to inference; on the other hand, existing QNN work does not consider the effect of noise. To this end, we present RoQNN, a QNN-specific framework that performs noise-aware optimizations in both the training and inference stages to improve robustness. We analytically derive and experimentally observe that the effect of quantum noise on the QNN measurement outcome is a linear map of the noise-free outcome with a scaling and a shift factor. Motivated by that, we propose post-measurement normalization to mitigate the feature distribution differences between noise-free and noisy scenarios. Furthermore, to improve robustness against noise, we propose injecting noise into the training process by inserting quantum error gates into the QNN according to realistic noise models of quantum hardware. Finally, post-measurement quantization is introduced to quantize the measurement outcomes to discrete values, achieving a denoising effect. Extensive experiments on 8 classification tasks using 6 quantum devices demonstrate that RoQNN improves accuracy by up to 43%, and achieves over 94% 2-class, 80% 4-class, and 34% 10-class MNIST classification accuracy measured on real quantum computers.
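To see why normalization helps when noise acts as a scaling and a shift, consider the following minimal sketch. It assumes an idealized noise model `noisy = gamma * x + beta` with a single positive `gamma` and a single `beta` for one qubit, and it standardizes outcomes over a batch; the sample values, `gamma`, `beta`, and the quantization range and level count are all made up for illustration and are not taken from the paper.

```python
import statistics

def normalize(values):
    """Standardize a batch of measurement outcomes for one qubit."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]

def quantize(values, levels=5, lo=-2.0, hi=2.0):
    """Clip to [lo, hi], then snap to `levels` evenly spaced values."""
    step = (hi - lo) / (levels - 1)
    snapped = []
    for v in values:
        clipped = min(max(v, lo), hi)
        snapped.append(lo + round((clipped - lo) / step) * step)
    return snapped

# Hypothetical noise-free expectation values for a batch of 5 inputs.
noise_free = [0.82, -0.35, 0.10, -0.71, 0.46]

# Assumed idealized noise: one scaling factor and one shift for this qubit.
gamma, beta = 0.6, 0.05
noisy = [gamma * x + beta for x in noise_free]

clean_norm = normalize(noise_free)
noisy_norm = normalize(noisy)

# A positive scaling and a shift cancel under standardization,
# so the two normalized batches agree up to floating-point error.
max_gap = max(abs(a - b) for a, b in zip(clean_norm, noisy_norm))
print("max gap after normalization:", max_gap)
print("quantized:", quantize(noisy_norm))
```

On real hardware the noise is not a perfect linear map, so normalization leaves a residual gap; snapping the outcomes to a few discrete levels is one way small residual perturbations can be absorbed, which is the denoising role the abstract assigns to post-measurement quantization.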

RobustQNN framework overview:

RobustQNN improves QNN accuracy on real quantum machines:

On-chip QNN: Towards Efficient On-Chip Training of Quantum Neural Networks

Hanrui Wang*, Zirui Li*, Jiaqi Gu, Yongshan Ding, David Z. Pan and Song Han

DAC 2022 paper / code

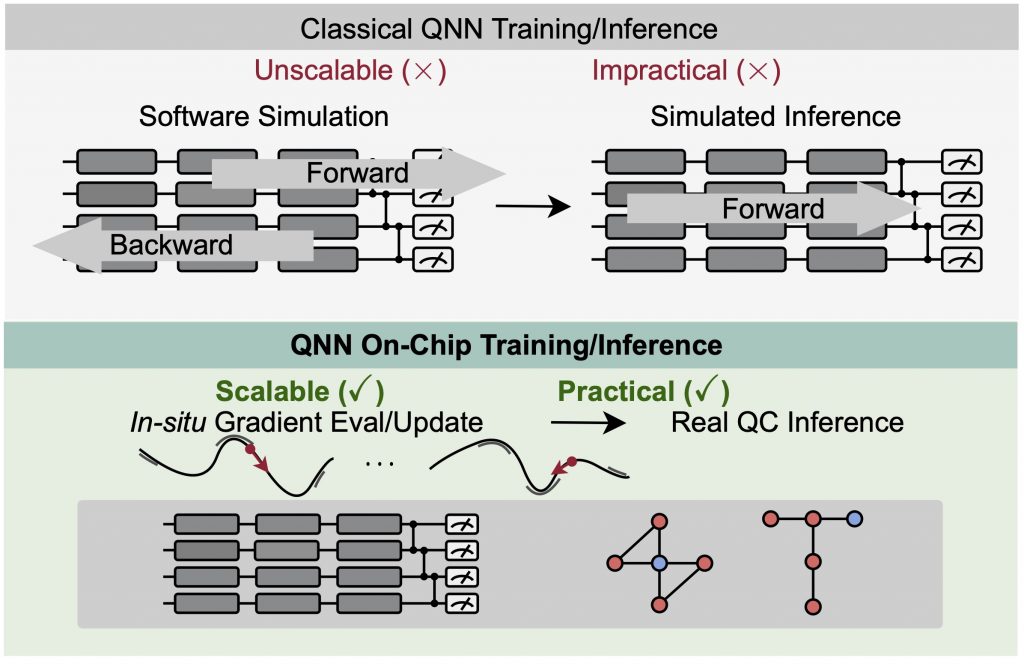

Quantum Neural Network (QNN) is drawing increasing research interest thanks to its potential to achieve quantum advantage on near-term Noisy Intermediate-Scale Quantum (NISQ) hardware. To achieve scalable QNN learning, the training process needs to be offloaded to real quantum machines instead of using exponential-cost classical simulators. One common approach to obtaining QNN gradients is parameter shift, whose cost scales linearly with the number of qubits. We present On-chip QNN, the first experimental demonstration of practical on-chip QNN training with parameter shift. Nevertheless, we find that due to the significant quantum errors (noise) on real machines, gradients obtained from naïve parameter shift have low fidelity and thus degrade the training accuracy. To this end, we further propose probabilistic gradient pruning, which first identifies gradients with potentially large errors and then removes them. Specifically, small gradients have larger relative errors than large ones and thus have a higher probability of being pruned. We perform extensive experiments on 5 classification tasks with 5 real quantum machines. The results demonstrate that our on-chip training achieves over 90% and 60% accuracy for 2-class and 4-class image classification tasks, respectively. Probabilistic gradient pruning brings up to 7% QNN accuracy improvement over no pruning. Overall, we obtain on-chip training accuracy similar to that of noise-free simulation, with much better training scalability.
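The two ingredients named above, parameter shift and probabilistic gradient pruning, can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the authors' implementation: `expectation` is a classical stand-in for running a circuit (the value of Z on both qubits after an RX rotation on each, starting from |00>), and `prune_gradients` is a hypothetical helper that keeps a fixed number of gradients, sampled without replacement with probability proportional to their magnitude, and zeroes the rest.

```python
import math
import random

def expectation(theta):
    """Toy stand-in for a circuit run: <ZZ> after RX(theta[0]), RX(theta[1]) on |00>."""
    return math.cos(theta[0]) * math.cos(theta[1])

def parameter_shift_grad(f, theta):
    """Gradient from two shifted evaluations per parameter (shift of pi/2)."""
    grads = []
    for i in range(len(theta)):
        plus, minus = list(theta), list(theta)
        plus[i] += math.pi / 2
        minus[i] -= math.pi / 2
        grads.append((f(plus) - f(minus)) / 2)
    return grads

def prune_gradients(grads, keep, rng):
    """Keep `keep` gradients sampled with weight |g|; set the others to zero."""
    remaining = list(range(len(grads)))
    kept = set()
    for _ in range(min(keep, len(grads))):
        # Small constant avoids an all-zero weight vector.
        weights = [abs(grads[i]) + 1e-12 for i in remaining]
        pick = rng.choices(remaining, weights=weights, k=1)[0]
        kept.add(pick)
        remaining.remove(pick)
    return [g if i in kept else 0.0 for i, g in enumerate(grads)]

theta = [0.3, 1.1]
grads = parameter_shift_grad(expectation, theta)
pruned = prune_gradients(grads, keep=1, rng=random.Random(0))
print("gradients:", grads)
print("after pruning:", pruned)
```

For rotation gates like the ones assumed here, the shift rule returns the exact derivative rather than a finite-difference approximation, and it needs only circuit evaluations, which is what makes it usable on real hardware. Because small gradients receive small sampling weights, they are the ones most likely to be dropped, matching the intuition that they carry the largest relative error.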

On-chip QNN framework overview:

On-chip QNN achieves effective QNN training on real quantum machines: